CAI4CAI

We are an academic research group focusing on Contextual Artificial Intelligence for Computer Assisted Interventions.

CAI4CAI is embedded in the School of Biomedical Engineering & Imaging Sciences at King’s College London, UK

About us

We are based at King’s College London

Our engineering research aims at improving surgical & interventional sciences

We take a multidisciplinary, collaborative approach to solve clinical challenges (📖)

Our labs are located in St Thomas’ hospital, a prominent London landmark

We design learning-based approaches for multi-modal reasoning

Medical imaging is a core source of information in our research

We design intelligent systems exploiting information captured by safe light

We strive to provide the right information at the right time to the surgical team and embrace human/AI interactions (📖)

Strong industrial links are key to accelerating the translation of cutting-edge research into clinical impact

We support open source, open access and involve patients in our research (👋)

Recent posts

Collaborative research

NeuroHSI

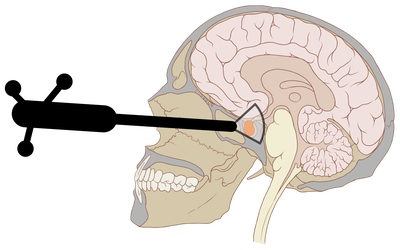

A prospective observational study to evaluate the use of an intraoperative hyperspectral imaging system in neurosurgery.

NeuroPPEye

A prospective observational study to evaluate intraoperative hyperspectral imaging for real-time quantitative fluorescence-guided surgery of low-grade glioma.

CDT AE-PSI

The EPSRC Centre for Doctoral Training in Advanced Engineering in Personalised Surgery & Intervention (CDT AE-PSI) is an innovative three-and-a-half-year PhD training programme aiming to deliver translational research and transform patient pathways.

CDT SMI

Through a comprehensive, integrated training programme, the Centre for Doctoral Training in Smart Medical Imaging trains the next generation of medical imaging researchers.

FAROS

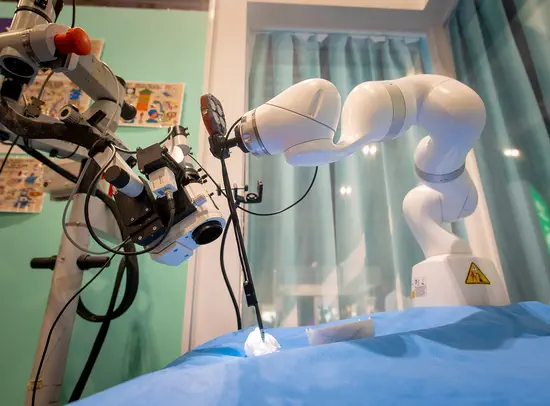

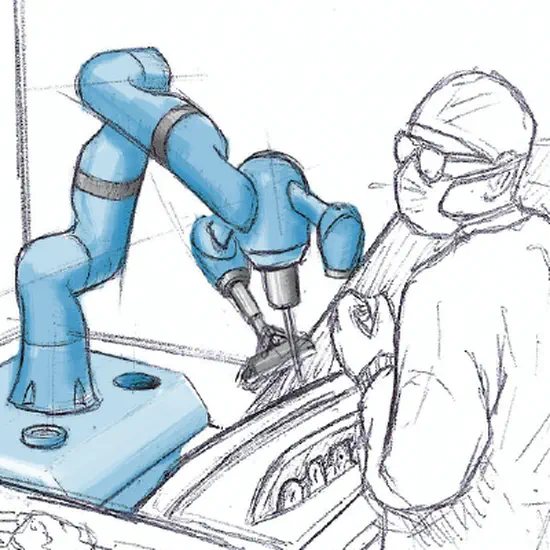

The Functionally Accurate RObotic Surgery (FAROS) H2020 project aims at improving functional accuracy through embedding physical intelligence in surgical robotics.

GIFT-Surg

The GIFT-Surg project is an international research effort developing the technology, tools and training necessary to make fetal surgery a viable possibility.

icovid

The icovid project focuses on AI-based lung CT analysis providing accurate quantification of disease and prognostic information in patients with suspected COVID-19 disease.

K-CSC

Up to 100 joint scholarship awards are available per year through the King’s-China Scholarship Council PhD Scholarship programme (K-CSC) to support students from China who are seeking to start an MPhil/PhD degree at King’s College London.

MRC DTP BiomedSci

The integrated and multi-disciplinary approach of the MRC Doctoral Training Partnership in Biomedical Sciences (MRC DTP BiomedSci) to medical research offers a wealth of cutting-edge PhD training opportunities in fundamental discovery science, translational research and experimental medicine.

TRABIT

The Translational Brain Imaging Training Network (TRABIT) is an interdisciplinary and intersectoral joint PhD training effort of computational scientists, clinicians, and the industry in the field of neuroimaging.

Wellcome / EPSRC CME

The Wellcome / EPSRC Centre for Medical Engineering combines fundamental research in engineering, physics, mathematics, computing, and chemistry with medicine and biomedical research.

Spin-outs and industry collaborations

Pathways to clinical impact

Moon Surgical

Moon Surgical has partnered with us to develop machine learning for computer-assisted surgery. More information is available in our press release.

Hypervision Surgical Ltd

Following successful in-patient clinical studies of CAI4CAI’s translational research on a computational hyperspectral imaging system for intraoperative surgical guidance, Hypervision Surgical Ltd was founded by Michael Ebner, Tom Vercauteren, Jonathan Shapey, and Sébastien Ourselin.

In collaboration with CAI4CAI, Hypervision Surgical’s goal is to convert the AI-powered imaging prototype system into a commercial medical device to equip clinicians with advanced computer-assisted tissue analysis for improved surgical precision and patient safety.

Intel (previously COSMONiO)

Intel is the industrial sponsor of Theo Barfoot’s PhD on active and continual learning strategies for deep learning assisted interactive segmentation of new databases.

Mauna Kea Technologies

Tom Vercauteren worked for 10 years with Mauna Kea Technologies (MKT) before resuming his academic career.

Medtronic

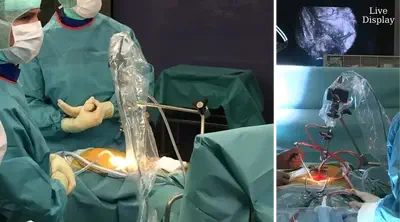

Medtronic is the industrial sponsor of Tom Vercauteren’s Medtronic / Royal Academy of Engineering Research Chair in Machine Learning for Computer-Assisted Neurosurgery.

Open research

Exemplar outputs of our research

LBR-Stack: ROS 2 and Python Integration of KUKA FRI for Med and IIWA Robots

The LBR-Stack integrates the KUKA Med and IIWA robots into ROS 2 and Python for accelerated research and deployment. The ROS 2 aspects are designed for deterministic execution with mission-critical hard real-time applications in mind. Backward compatibility across robot vendor driver versions is provided to facilitate broader community support.

CAI4CAI on GitHub

We support open source and typically use GitHub to disseminate our research. Repositories can be found on our group organisation (CAI4CAI) or on individual members' GitHub profiles.

Transferring Relative Monocular Depth to Surgical Vision with Temporal Consistency

This work makes significant strides in the field of monocular endoscopic depth perception, drastically improving on previous methods. The task of monocular depth perception demonstrates a nuanced understanding of the surgical scene, and could act as a vital building block in future technologies. To achieve our results, we leverage large vision transformers trained on huge natural image datasets and fine-tuned on our ensembled meta-dataset of surgical videos. Read more about it in our pre-print, or get our model here.

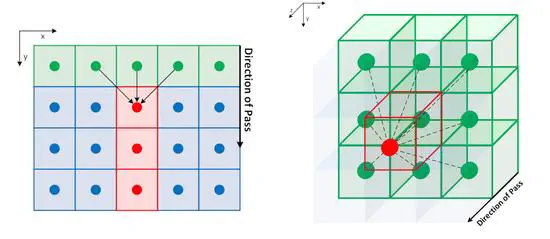

FastGeodis: Fast generalised geodesic distance transform

FastGeodis is an open-source package that provides efficient implementations for computing Geodesic and Euclidean distance transforms (or a mixture of both), targeting efficient utilisation of CPU and GPU hardware.
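To make the idea of a mixed Geodesic/Euclidean distance transform concrete, here is a minimal pure-Python sketch on a small 2D grid. It is not the FastGeodis implementation or API: it uses plain Dijkstra on an 8-connected grid (so the Euclidean case is only approximated), and the function name `geodesic_distance_2d`, the `lamb` mixing parameter, and the convex combination of spatial and intensity step costs are assumptions chosen for illustration.

```python
import heapq
import math


def geodesic_distance_2d(image, seeds, lamb=1.0):
    """Illustrative generalised geodesic distance on a 2D grid (Dijkstra).

    image: list of rows of floats (pixel intensities).
    seeds: iterable of (row, col) pixels whose distance is zero.
    lamb:  assumed mixing weight; 0.0 uses only spatial step length,
           1.0 uses only the intensity difference between neighbours.
    """
    rows, cols = len(image), len(image[0])
    dist = [[math.inf] * cols for _ in range(rows)]
    heap = []
    for r, c in seeds:
        dist[r][c] = 0.0
        heapq.heappush(heap, (0.0, r, c))

    # 8-connected neighbourhood (all offsets except the centre pixel).
    offsets = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
               if (dr, dc) != (0, 0)]

    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # stale queue entry, a shorter path was already found
        for dr, dc in offsets:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                spatial = math.hypot(dr, dc)
                intensity = abs(image[nr][nc] - image[r][c])
                step = (1.0 - lamb) * spatial + lamb * intensity
                if d + step < dist[nr][nc]:
                    dist[nr][nc] = d + step
                    heapq.heappush(heap, (d + step, nr, nc))
    return dist


# Toy image: a dark region (0.0) next to a bright column (1.0).
image = [
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0],
]
euclidean_like = geodesic_distance_2d(image, [(0, 0)], lamb=0.0)
geodesic = geodesic_distance_2d(image, [(0, 0)], lamb=1.0)
```

With `lamb=0.0` the distance grows with grid position only, whereas with `lamb=1.0` every pixel in the dark region stays at distance zero from the seed and the bright column is reached only by paying for the intensity jump. That behaviour is what makes geodesic distances useful for interactive segmentation.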

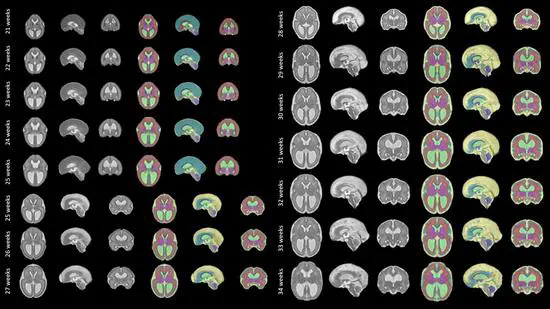

A spatio-temporal atlas of the developing fetal brain with spina bifida aperta

We make publicly available a spatio-temporal fetal brain MRI atlas for SBA. This atlas can support future research on automatic segmentation methods for brain 3D MRI of fetuses with SBA.

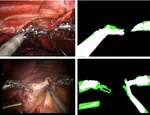

Image Compositing for Segmentation of Surgical Tools without Manual Annotations

We have recently published the paper “Garcia-Peraza-Herrera, L. C., Fidon, L., D’Ettorre, C., Stoyanov, D., Vercauteren, T., Ourselin, S. (2021). Image Compositing for Segmentation of Surgical Tools without Manual Annotations. Transactions in Medical Imaging (📖)”. Inspired by special effects, we introduce a novel deep-learning method to segment surgical instruments in endoscopic images.

MONAI (Medical Open Network for AI): PyTorch for medical imaging

We contribute to MONAI, a PyTorch-based, open-source framework for deep learning in healthcare imaging, part of the PyTorch Ecosystem.

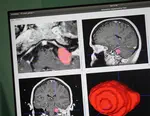

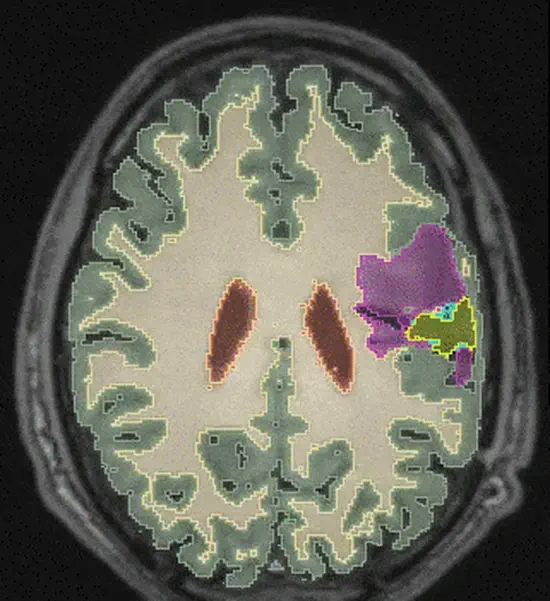

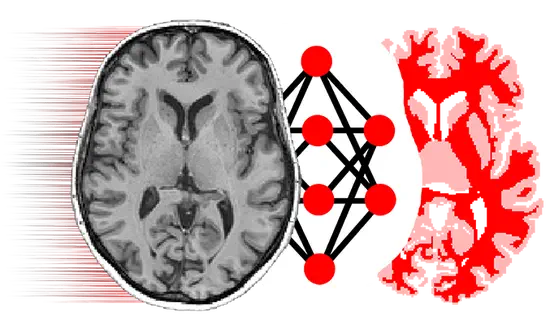

Learning joint Segmentation of Tissues And Brain Lesions (jSTABL) from task-specific hetero-modal domain-shifted datasets

Open source PyTorch implementation of “Dorent, R., Booth, T., Li, W., Sudre, C. H., Kafiabadi, S., Cardoso, J., … & Vercauteren, T. (2020). Learning joint segmentation of tissues and brain lesions from task-specific hetero-modal domain-shifted datasets. Medical Image Analysis, 67, 101862 (📖).”

DeepReg: Medical image registration using deep learning

DeepReg is a freely available, community-supported open-source toolkit for research and education in medical image registration using deep learning (📖).

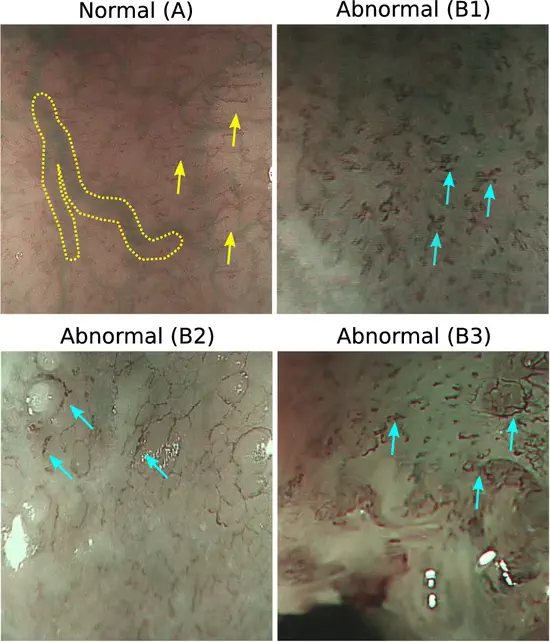

Intrapapillary Capillary Loop (IPCL) Classification

We provide open source code and open access data for our paper “García-Peraza-Herrera, L. C., Everson, M., Lovat, L., Wang, H. P., Wang, W. L., Haidry, R., … & Vercauteren, T. (2020). Intrapapillary capillary loop classification in magnification endoscopy: Open dataset and baseline methodology. International journal of computer assisted radiology and surgery, 1-9 (📖).”

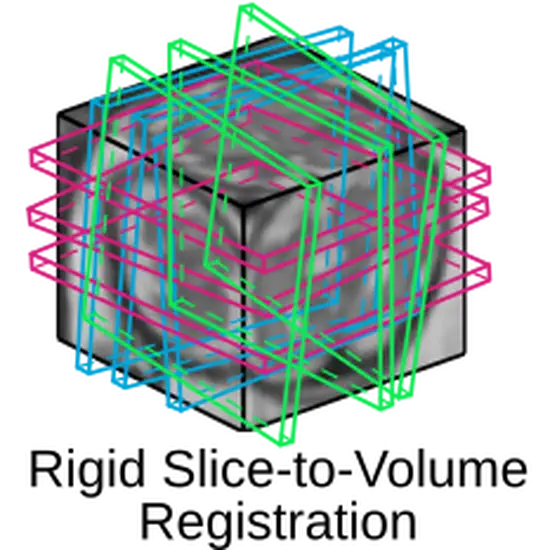

Fetal brain MRI reconstruction (NiftyMIC)

NiftyMIC is a Python-based open-source toolkit for research developed within the GIFT-Surg project to reconstruct an isotropic, high-resolution volume from multiple, possibly motion-corrupted, stacks of low-resolution 2D slices. Read “Ebner, M., Wang, G., Li, W., Aertsen, M., Patel, P. A., Aughwane, R., … & David, A. L. (2020). An automated framework for localization, segmentation and super-resolution reconstruction of fetal brain MRI. NeuroImage, 206, 116324 (📖).”

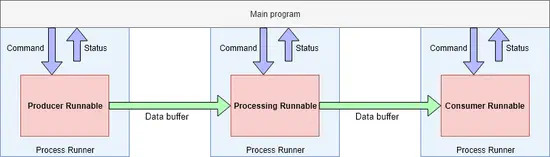

Python Unified Multi-tasking API (PUMA)

PUMA provides a simultaneous multi-tasking framework that takes care of managing the complexities of executing and controlling multiple threads and/or processes.

GIFT-Grab: Simple frame grabbing API

GIFT-Grab is an open-source C++ and Python API for acquiring, processing and encoding video streams in real time (📖).

Open positions

You can browse our list of open positions (if any) here, and gain insight into the type of positions we typically advertise by browsing through our list of previous openings. We are also supportive of hosting strong PhD candidates and researchers supported by a personal fellowship/grant.

Please note that applications for the listed open positions need to be made through the University portal to be formally taken into account.

Post overview:

- Focus: Support surgical imaging data analysis tasks and work on computational algorithms to help streamline data annotation

- Line manager: Tom Vercauteren

- Salary: Grade 5, £38,482 - £43,249 or Grade 6, £44,355 – £47,882 (max SP34) per annum including LWA depending on experience

- Duration: Fixed term contract until 30 Sep 2025

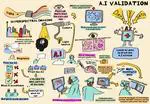

On 21st September we held our fourth ‘Science for Tomorrow’s Neurosurgery’ PPI group meeting online, with presentations from Oscar, Matt and Silvère. Presentations focused on an update from the NeuroHSI trial, with clear demonstration of improvements in resolution of the HSI images we are now able to acquire; this prompted real praise from our patient representatives, which is extremely reassuring for the trial going forward. We also took this opportunity to announce the completion of the first phase of NeuroPPEye, in which we aim to use HSI to quantify tumour fluorescence beyond that which the human eye can see. Discussions were centered around the theme of “what is an acceptable number of participants for proof of concept studies,” generating very interesting points of view that ultimately concluded that there was no “hard number” from the patient perspective, as long as a thorough assessment of the technology had been carried out. This is extremely helpful in how we progress with the trials, particularly NeuroPPEye, which will begin recruitment for its second phase shortly. Once again, the themes and discussions were summarized in picture format by our phenomenal illustrator, Jenny Leonard (see below) and we are already making plans for our next meeting in February 2024!

This video presents work led by Martin Huber. Deep Homography Prediction for Endoscopic Camera Motion Imitation Learning investigates a fully self-supervised method for learning endoscopic camera motion from readily available datasets of laparoscopic interventions. The work addresses and tries to go beyond the common tool-following assumption in endoscopic camera motion automation. This work will be presented at the 26th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2023).

This video presents work led by Mengjie Shi focusing on learning-based sound-speed correction for dual-modal photoacoustic/ultrasound imaging. This work will be presented at the 2023 IEEE International Ultrasonics Symposium (IUS).

You can read the preprint on arXiv: 2306.11034 and get the code from GitHub.

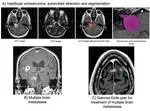

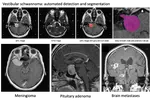

Recently, we organized a Public and Patient Involvement (PPI) group with Vestibular Schwannoma patients to understand their perspectives on a patient-centered automated report. Partnering with the British Acoustic Neuroma Association (BANA), we recruited participants by circulating a form within the BANA community through their social media platforms.

Post overview:

- Focus: Translational research on hyperspectral-based quantitative fluorescence imaging linked with a neurosurgery clinical study

- Line manager: Tom Vercauteren

- Clinical collaborator: King’s College Hospital

- Industry collaborator: Hypervision Surgical

- Salary: Grade 6, £41,386-£48,414 or Grade 7 £49,737-£55,306 per annum, including London Weighting Allowance

CAI4CAI members and alumni are leading the organization of the new edition of the cross-modality Domain Adaptation (crossMoDA) challenge for medical image segmentation, which will run as an official challenge during the Medical Image Computing and Computer Assisted Interventions (MICCAI) 2023 conference.

The four co-founders of Hypervision Surgical, a King’s spin-out company, have been awarded the Cutlers’ Surgical Prize for outstanding work in the field of instrumentation, innovation and technical development.

The Cutlers’ Surgical Prize is one of the most prestigious annual prizes for original innovation in the design or application of surgical instruments, equipment or practice to improve the health and recovery of surgical patients.

This video presents work led by Christopher E. Mower. OpTaS is an OPtimization-based TAsk Specification Python library for trajectory optimization and model predictive control. The code can be found at https://github.com/cmower/optas. This work will be presented at the 2023 IEEE International Conference on Robotics and Automation (ICRA).

We are working to develop new technologies that combine a new type of camera system, referred to as hyperspectral, with Artificial Intelligence (AI) systems to reveal to neurosurgeons information that is otherwise not visible to the naked eye during surgery. Two studies are currently bringing this “hyperspectral” technology to operating theatres. The NeuroHSI study uses a hyperspectral camera attached to an external scope to show surgeons critical information on tissue blood flow and distinguishes vulnerable structures which need to be protected. The NeuroPPEye study is developing this technology adapted for surgical microscopes, to guide tumour surgery.

This video presents work led by Christopher E. Mower. The ROS-PyBullet Interface is a framework between the reliable contact simulator PyBullet and the Robot Operating System (ROS) with additional utilities for Human-Robot Interaction in the simulated environment. This work was presented at the Conference on Robot Learning (CoRL), 2022. The corresponding paper can be found at PMLR.

Muhammad led the development of FastGeodis, an open-source package that provides efficient implementations for computing Geodesic and Euclidean distance transforms (or a mixture of both), targeting efficient utilisation of CPU and GPU hardware. This package is able to handle 2D as well as 3D data, where it achieves up to a 20x speedup on a CPU and up to a 74x speedup on a GPU as compared to an existing open-source library that uses a non-parallelisable single-thread CPU implementation. Further in-depth comparison of performance improvements is discussed in the FastGeodis documentation.

WiM-WILL is a digital platform that enables MICCAI members to share their career pathways with the outside world in parallel to the MICCAI conference. Muhammad Asad (interviewer) and Navodini Wijethilake (interviewee) from our lab group participated in this competition this year and secured second place. Their interview focused on overcoming challenges in research as a student. The link to the complete interview is available below and on YouTube.

Post overview:

- Focus: Translational research in biomedical optics for hyperspectral imaging based quantitative fluorescence linked with an in-patient neurosurgery clinical study

- Line manager: Tom Vercauteren

- Clinical collaborator: King’s College Hospital

- Industry collaborator: Hypervision Surgical

- Salary: Grade 6, £38,826 - £45,649 per annum, including London Weighting Allowance

The recent release of MONAI Label v0.4.0 extends support for multi-label scribbles interactions to enable scribbles-based interactive segmentation methods.

CAI4CAI team member Muhammad Asad contributed to the development, testing and review of features related to scribbles-based interactive segmentation in MONAI Label.

We are actively involving patients and carers to make our research on next generation neurosurgery more relevant and impactful. In early February 2022, our research scientists from King’s College London and King’s College Hospital organised a Patient and Public Involvement (PPI) meeting with support from The Brain Tumour Charity.

Post overview:

- Focus: Translational research on real-time computing for hyperspectral imaging linked with an active neurosurgery clinical study

- Line manager: Tom Vercauteren

- Industry collaborator: Hypervision Surgical

- Clinical collaborator: King’s College Hospital

- Salary: Grade 6, £38,826 - £45,649 per annum, including London Weighting Allowance

Post overview:

- Focus: Translational research on hyperspectral-based quantitative fluorescence imaging linked with a neurosurgery clinical study

- Line manager: Tom Vercauteren

- Clinical collaborator: King’s College Hospital

- Industry collaborator: Hypervision Surgical

- Salary: Grade 6, £38,826 - £45,649 per annum, including London Weighting Allowance

Post overview:

- Focus: Real-time learning-based processing of hyperspectral imaging and its integration in complex robotic systems

- Line manager: Tom Vercauteren

- Related research project: FAROS

- Salary: Grade 6, £38,826 - £45,649 or Grade 7, £46,934 - £50,919 per annum, including London Weighting Allowance

Project overview:

- Title: Exploiting multi-task learning for endoscopic vision in robotic surgery

- First supervisor: Miaojing Shi

- Second supervisor: Tom Vercauteren

- Clinical Supervisor: Asit Arora

- Start date: October 2022

Project summary

Multi-task learning is common in deep learning, where clear evidence shows that jointly learning correlated tasks can improve on individual performances. Notwithstanding, in reality, many tasks are processed independently. The reasons are manifold:

Join us at the IEEE International Ultrasonics Symposium where CAI4CAI members will present their work.

The symposium runs 11-16 September 2021.

Christian Baker will be presenting on “Real-Time Ultrasonic Tracking of an Intraoperative Needle Tip with Integrated Fibre-optic Hydrophone” as part of the Tissue Characterization & Real Time Imaging (AM) poster session.

King’s College London, School of Biomedical Engineering & Imaging Sciences and Moon Surgical announced a new strategic partnership to develop Machine Learning applications for Computer-Assisted Surgery, which aims to strengthen surgical artificial intelligence (AI), data and analytics, and accelerate translation from King’s College London research into clinical usage.

Yijing will develop a 3D functional optical imaging system for guiding brain tumour resection.

She will engineer two emerging modalities, light field and multispectral imaging, into a compact device, and develop novel image reconstruction algorithms to produce and display high-dimensional images. The CME fellowship will support her to carry out proof-of-concept studies and start critical new collaborations within and outside the centre. She hopes the award will act as a stepping stone towards future long-term fellowships and grants, and thus help her establish an independent research programme.

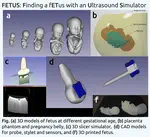

Miguel will collaborate with Fang-Yu Lin and Shu Wang to create activities to engage school students with ultrasound-guided intervention and fetal medicine. In the FETUS project, they will develop interactive activities with 3D-printed fetus and placenta phantoms, as well as the integration of a simulator that explains the principles of needle enhancement in an ultrasound needle tracking system.

A great milestone today for Luis Garcia Peraza Herrera who passed his PhD viva with minor corrections! His thesis is entitled “Deep Learning for Real-time Image Understanding in Endoscopic Vision”.

Thanks to Ben Glocker and Enrico Grisan for their role examining the thesis.

Contact

- 1 Lambeth Palace Road, London, SE1 7EU, UK

- Becket House 9th floor

- Find us on GitHub

- Find us on Vimeo

![PhD opportunity [October 2026 start] on "Learning-based vision system for marker-free external ventricular drain (EVD) neuronavigation"](/post/2026-02-08-markerfreenav-phd/featured_hu_15d415c832907391.webp)

![PhD opportunity [October 2026 start] on "Intelligent deep learning neuroimaging system for guiding brain tumour treatment"](/post/2026-02-07-mdtm-phd/featured_hu_fb970bf70d2da35e.webp)

![PhD opportunity [October 2026 start] on "Immersive visuo-haptic endovascular tele-operation through AI-enabled multimodal semantic telecommunication"](/post/2026-02-06-semantictelco-phd/featured_hu_7a6fe1069b8eab8f.webp)

![PhD opportunity [October 2026 start] on "Language-based agentic collaboration for endovascular acute stroke treatment"](/post/2026-01-19-languageagentstroke-phd/featured_hu_45f4b68c46e14145.webp)

![PhD opportunity [June 2026 start] on "Anatomy localisation in X-ray fluoroscopy videos for mechanical thrombectomy" in collaboration with Telos Health](/post/2025-09-29-mtanatomy-phd/featured_hu_569af4bbad08247b.webp)

![PhD opportunity [October 2026 start] on "Patient-specific CTA-informed in-silico simulation of interventional fluoroscopy: A digital twin for stroke patients"](/post/2024-11-18-fluoroscopydt-phd/featured_hu_7e64a54a9263e9d3.webp)

![PhD opportunity [October 2026 start] on "Real-time intraoperative polarimetric imaging to detect nerves during brain tumour surgery"](/post/2025-09-29-polarimetry-phd/featured_hu_14efa545ae00abca.webp)

![KDC Africa PhD studentship [October 2025 start preferred - no later than June 2026] on "Resource-efficient slice-to-volume MRI super-resolution reconstruction for improved meningioma management in Sub-Saharan Africa"](/post/2025-01-28-mrireconsssa-phd/featured_hu_6243408d2778cc22.webp)

![PhD opportunity [October 2025 start] on "Machine Learning Tool for Predicting Digital Twin Trajectories of Meningioma Growth on MRI Brain Scans"](/post/2024-11-18-meningiomagrowth-phd/featured_hu_614d4b444e84b2fe.webp)

![PhD opportunity [October 2025 start] on "Computational stereovision synthesis from monocular neuroendoscopy"](/post/2024-09-24-monostereo-phd/featured_hu_73f69e9de90cac99.webp)

![PhD opportunity [October 2024 start] on "Text promptable semantic segmentation of volumetric neuroimaging data"](/post/2023-10-04-textpromptseg-phd/featured_hu_74717dac224f3504.webp)

![[Job] Research Coordinator - King's College Hospital NHS Foundation Trust](/post/2022-12-01-researchcoordinator/featured_hu_a65c931746e06099.webp)

![PhD opportunity [February 2024 start] on "Incorporating Expert-consistent Spatial Structure Relationships in Learning-based Brain Parcellation"](/post/2022-11-15-twaiparcellation/featured_hu_11228d5ca49d472d.webp)

![PhD opportunity [February 2024 start] on "Accurate automated quantification of spine evolution — it’s about time!"](/post/2022-11-13-spinequantification/featured_hu_29866223f35c9f14.webp)

![PhD opportunity [February 2024 start] on "Physically-informed learning-based beamforming for multi-transducer ultrasound imaging"](/post/2021-11-03-usbeamforming/featured_hu_1dcd108d7f697969.webp)

![PhD opportunity [June 2022 start] on "Computational approaches for quantitative fluorescence-guided neurosurgery"](/post/2022-03-24-qfhsiphd/featured_hu_fb288d650a2c9fe9.webp)

![[CfP] MedIA Special Issue on Explainable and Generalizable Deep Learning Methods for Medical Image Computing](/post/2021-05-24-media-si/featured_hu_934c0f60f8842ad6.webp)